Winner-take-all (computing)

Winner-take-all is a computational principle applied in computational models of neural networks by which neurons compete with each other for activation. In the classical form, only the neuron with the highest activation stays active while all other neurons shut down; however, other variations allow more than one neuron to be active, for example the soft winner-take-all, in which a power function is applied to the neurons' activations.
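As a rough illustration of the difference between the classical and soft variants, the Python sketch below applies both to a list of activations. The soft form shown (raise each activation to a power `p`, then normalise so the outputs sum to 1) is one common choice assumed here for illustration; the function names `hard_wta` and `soft_wta` are hypothetical.

```python
from typing import List


def hard_wta(activations: List[float]) -> List[float]:
    """Classical winner-take-all: keep only the largest activation, zero the rest.

    Ties go to the lowest index (an assumption; tie handling is not specified).
    """
    winner = max(range(len(activations)), key=lambda i: activations[i])
    return [a if i == winner else 0.0 for i, a in enumerate(activations)]


def soft_wta(activations: List[float], p: float = 4.0) -> List[float]:
    """Soft winner-take-all via a power function (hypothetical form).

    Each activation (clipped at zero) is raised to the power p and the results
    are normalised to sum to 1. A larger p sharpens the competition.
    """
    powered = [max(a, 0.0) ** p for a in activations]
    total = sum(powered)
    if total == 0.0:
        return [0.0 for _ in activations]
    return [v / total for v in powered]


if __name__ == "__main__":
    acts = [0.2, 0.9, 0.5]
    print(hard_wta(acts))         # [0.0, 0.9, 0.0]
    print(soft_wta(acts, p=1.0))  # every unit keeps a share of the output
    print(soft_wta(acts, p=8.0))  # most of the mass goes to the largest unit
```

With `p = 1` every unit stays partially active; as `p` grows, the soft output approaches the one-hot result of the classical rule.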

Neural networks

In the theory of artificial neural networks, winner-take-all networks are a case of competitive learning in recurrent neural networks. Output nodes in the network mutually inhibit each other, while simultaneously activating themselves through reflexive connections. After some time, only one node in the output layer will be active, namely the one corresponding to the strongest input. Thus the network uses nonlinear inhibition to pick out the largest of a set of inputs. Winner-take-all is a general computational primitive that can be implemented using different types of neural network models, including both continuous-time and spiking networks.[1][2]
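The recurrent dynamics described above can be sketched with a Maxnet-style update, a standard discrete-time scheme swapped in here for illustration rather than taken from the cited models. Each unit keeps its own activation (self-excitation with weight 1), is inhibited by the sum of all other units with a small weight `epsilon`, and is clipped at zero. The function name `maxnet`, the value of `epsilon` and the stopping rule are assumptions.

```python
from typing import List


def maxnet(inputs: List[float], epsilon: float = 0.1, max_steps: int = 1000) -> List[float]:
    """Discrete-time recurrent winner-take-all (Maxnet-style sketch).

    Assumes non-negative inputs and 0 < epsilon < 1 / len(inputs).
    Stops when at most one unit is still active, or after max_steps.
    """
    a = [max(x, 0.0) for x in inputs]
    for _ in range(max_steps):
        if sum(1 for v in a if v > 0.0) <= 1:
            break
        total = sum(a)
        # Self-excitation (v) minus mutual inhibition from every other unit.
        a = [max(0.0, v - epsilon * (total - v)) for v in a]
    return a


if __name__ == "__main__":
    print(maxnet([0.2, 0.9, 0.5, 0.6]))  # only index 1 stays positive
```

Because every surviving unit receives the same total inhibition minus its own share, the gap between the strongest unit and the others grows each step until only the strongest remains. Exactly tied maxima are never separated by this update, so in that case the loop simply runs until `max_steps`.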

Winner-take-all networks are commonly used in computational models of the brain, particularly for distributed decision-making or action selection in the cortex. Important examples include hierarchical models of vision,[3] and models of selective attention and recognition.[4][5] They are also common in artificial neural networks and neuromorphic analog VLSI circuits. It has been formally proven that the winner-take-all operation is computationally powerful compared to other nonlinear operations, such as thresholding.[6]

In many practical cases, not just a single neuron but exactly k neurons become active, for a fixed number k. This principle is referred to as k-winners-take-all.
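A minimal sketch of the k-winners-take-all selection, returning a 0/1 mask over the units. The function name is hypothetical, and breaking ties at the k-th place in favour of lower indices is an assumption.

```python
from typing import List


def k_winners_take_all(activations: List[float], k: int) -> List[int]:
    """Return a 0/1 mask marking the k units with the largest activations."""
    if not 0 <= k <= len(activations):
        raise ValueError("k must be between 0 and the number of units")
    # sorted() is stable, so equal activations keep their index order.
    order = sorted(range(len(activations)), key=lambda i: -activations[i])
    winners = set(order[:k])
    return [1 if i in winners else 0 for i in range(len(activations))]


if __name__ == "__main__":
    print(k_winners_take_all([0.2, 0.9, 0.5, 0.6], 2))  # [0, 1, 0, 1]
```

With `k = 1` this reduces to the classical winner-take-all selection.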

Example algorithm

Consider a single linear neuron with inputs $x = (x_1, \dots, x_n)$. Each input $x_i$ has weight $w_i$, and the output of the neuron is $y = \sum_i w_i x_i$. In the Instar learning rule, on each input vector, the weights are modified according to $\Delta w_i = \eta \, y \, (x_i - w_i)$, where $\eta$ is the learning rate.[7] This rule is unsupervised, since it needs only the input vector, not a reference output.
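A one-step sketch of the Instar rule for a single linear neuron, assuming the update takes the form $\Delta w_i = \eta \, y \, (x_i - w_i)$ with $y = \sum_i w_i x_i$. The function name `instar_update` is hypothetical.

```python
from typing import List


def instar_update(w: List[float], x: List[float], eta: float) -> List[float]:
    """One Instar step: w_i <- w_i + eta * y * (x_i - w_i)."""
    y = sum(wi * xi for wi, xi in zip(w, x))  # linear output y = sum_i w_i x_i
    # Move each weight toward its input, scaled by the neuron's output.
    return [wi + eta * y * (xi - wi) for wi, xi in zip(w, x)]


if __name__ == "__main__":
    # y = 0.5, so the weights move a quarter of the way toward x.
    print(instar_update([0.5, 0.5], [1.0, 0.0], 0.5))  # [0.625, 0.375]
```

Repeated presentations of the same input pull the weight vector toward that input, which is why the rule needs no reference output.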

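Now, consider multiple linear neurons $y_1, \dots, y_m$. The output of each satisfies $y_j = \sum_i w_{ij} x_i$, where $w_{ij}$ is the weight from input $i$ to neuron $j$.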

In the winner-take-all algorithm, the weights are modified as follows. Given an input vector $x$, each output $y_j$ is computed. The neuron with the largest output is selected, and the weights going into that neuron are modified according to the Instar learning rule. All other weights remain unchanged.[8]
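The learning step described above can be sketched as follows, assuming the Instar update $\Delta w_{ij} = \eta \, y_j \, (x_i - w_{ij})$ and a hypothetical layout in which row `W[j]` holds the weights going into output neuron `j`. The function name is also hypothetical.

```python
from typing import List


def wta_learning_step(W: List[List[float]], x: List[float], eta: float) -> int:
    """One winner-take-all learning step; updates W in place, returns the winner."""
    # Linear outputs y_j = sum_i w_ij * x_i for every output neuron.
    outputs = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    winner = max(range(len(W)), key=lambda j: outputs[j])
    y = outputs[winner]
    # Only the winner's incoming weights change; all other rows are untouched.
    W[winner] = [w + eta * y * (xi - w) for w, xi in zip(W[winner], x)]
    return winner


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]
    print(wta_learning_step(W, [0.2, 0.8], 0.5))  # 1
    print(W)  # row 0 unchanged, row 1 moved toward [0.2, 0.8]
```

Over many inputs, each weight row tends to drift toward the inputs it wins, which is the basis of competitive (clustering-style) learning.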

The k-winners-take-all rule is similar, except that the Instar learning rule is applied to the weights going into the k neurons with the largest outputs.[8]
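Extending the same sketch to k winners only changes the selection step: the Instar update (assumed here to be $\Delta w_{ij} = \eta \, y_j \, (x_i - w_{ij})$) is applied to the rows of the k neurons with the largest outputs. The row layout `W[j]` and the function name are hypothetical.

```python
from typing import List


def k_wta_learning_step(
    W: List[List[float]], x: List[float], eta: float, k: int
) -> List[int]:
    """Apply the Instar update to the k largest-output neurons; W is modified in place."""
    outputs = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    # Indices of the k largest outputs (stable sort keeps lower indices first on ties).
    winners = sorted(range(len(W)), key=lambda j: -outputs[j])[:k]
    for j in winners:
        y = outputs[j]
        W[j] = [w + eta * y * (xi - w) for w, xi in zip(W[j], x)]
    return winners


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    print(k_wta_learning_step(W, [0.2, 0.8], 0.5, 2))  # [1, 2]
```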

Circuit example

A simple but popular CMOS winner-take-all circuit is shown on the right. This circuit was originally proposed by Lazzaro et al. (1989)[9] using MOS transistors biased to operate in the weak-inversion, or subthreshold, regime. In the particular case shown there are only two inputs ($I_{\text{IN},1}$ and $I_{\text{IN},2}$), but the circuit extends straightforwardly to more inputs. It operates on continuous-time input signals (currents) in parallel, using only two transistors per input. In addition, the bias current $I_{\text{BIAS}}$ is set by a single global transistor that is common to all the inputs.

The largest of the input currents sets the common potential $V_C$. As a result, the corresponding output carries almost all the bias current, while the other outputs have currents that are close to zero. Thus, the circuit selects the larger of the two input currents: if $I_{\text{IN},1} > I_{\text{IN},2}$, then $I_{\text{OUT},1} \approx I_{\text{BIAS}}$ and $I_{\text{OUT},2} \approx 0$. Similarly, if $I_{\text{IN},2} > I_{\text{IN},1}$, then $I_{\text{OUT},1} \approx 0$ and $I_{\text{OUT},2} \approx I_{\text{BIAS}}$.

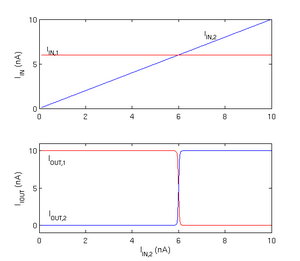

A SPICE-based DC simulation of the CMOS winner-take-all circuit in the two-input case is shown on the right. As shown in the top subplot, the input $I_{\text{IN},1}$ was fixed at 6 nA, while $I_{\text{IN},2}$ was linearly increased from 0 to 10 nA. The bottom subplot shows the two output currents. As expected, the output corresponding to the larger of the two inputs carries the entire bias current (10 nA in this case), forcing the other output current nearly to zero.
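The circuit's ideal input/output behaviour can be summarised by a purely behavioural Python model. This is an abstraction assumed for illustration, not a transistor-level or SPICE model: it ignores the smooth transition a real subthreshold circuit shows when the inputs are nearly equal. The function name is hypothetical, and currents are expressed in nA to match the simulation values above.

```python
from typing import List


def ideal_wta_currents(i_in: List[float], i_bias: float) -> List[float]:
    """Idealised outputs of an N-input current-mode WTA circuit.

    The output of the largest input carries the whole bias current and all
    other outputs carry zero. An exact tie goes to the lowest index, an
    arbitrary choice of this model.
    """
    winner = max(range(len(i_in)), key=lambda n: i_in[n])
    return [i_bias if n == winner else 0.0 for n in range(len(i_in))]


if __name__ == "__main__":
    # I_IN,1 fixed at 6 nA, I_IN,2 swept, I_BIAS = 10 nA (values in nA).
    for i2 in [0.0, 2.0, 4.0, 8.0, 10.0]:
        print(i2, ideal_wta_currents([6.0, i2], 10.0))
```

In this idealisation the outputs switch abruptly when $I_{\text{IN},2}$ crosses 6 nA; the simulated circuit makes the same switch, but over a finite range of input currents.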

Other uses

In stereo matching algorithms, following the taxonomy proposed by Scharstein and Szeliski,[10] winner-take-all is a local method for disparity computation: at each pixel, the disparity associated with the minimum (or, depending on the cost measure, maximum) cost value is selected.
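A minimal sketch of winner-take-all disparity selection on one rectified scanline. It assumes a simple per-pixel absolute-difference cost $|L(x) - R(x - d)|$ with no aggregation window, which is a simplification; practical local methods usually aggregate costs over a window before the winner-take-all step. The function name is hypothetical.

```python
from typing import List


def wta_disparity(left: List[float], right: List[float], max_disp: int) -> List[int]:
    """Pick, for each pixel, the disparity with the minimum matching cost."""
    disparities = []
    for x in range(len(left)):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disp + 1):
            if x - d < 0:
                break  # the matching pixel would fall outside the right image
            cost = abs(left[x] - right[x - d])  # absolute-difference cost
            if cost < best_cost:  # strict '<' keeps the smallest d on ties
                best_d, best_cost = d, cost
        disparities.append(best_d)
    return disparities


if __name__ == "__main__":
    # The right scanline is the left one shifted by one pixel.
    print(wta_disparity([1, 2, 3, 4, 5, 6], [2, 3, 4, 5, 6, 0], 2))  # [0, 1, 1, 1, 1, 1]
```

The first pixel can only be matched at disparity 0, which is why it differs from the rest of the scanline.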

In the electronic commerce market, it is widely held that early dominant players such as AOL or Yahoo! get most of the rewards. By 1998, one study found that the top 5% of all web sites garnered more than 74% of all traffic.

The winner-take-all hypothesis in economics suggests that once a technology or a firm gets ahead, it will do better and better over time, whereas lagging technology and firms will fall further behind. See First-mover advantage.


References

- ^ Grossberg, Stephen (1982), "Contour Enhancement, Short Term Memory, and Constancies in Reverberating Neural Networks", Studies of Mind and Brain, Boston Studies in the Philosophy of Science, vol. 70, Dordrecht: Springer Netherlands, pp. 332–378, doi:10.1007/978-94-009-7758-7_8, ISBN 978-90-277-1360-5, retrieved 2022-11-05

- ^ Oster, Matthias; Douglas, Rodney; Liu, Shih-Chii (2009). "Computation with Spikes in a Winner-Take-All Network". Neural Computation. 21 (9): 2437–2465. doi:10.1162/neco.2009.07-08-829. PMID 19548795. S2CID 7259946.

- ^ Riesenhuber, Maximilian; Poggio, Tomaso (1999-11-01). "Hierarchical models of object recognition in cortex". Nature Neuroscience. 2 (11): 1019–1025. doi:10.1038/14819. ISSN 1097-6256. PMID 10526343. S2CID 8920227.

- ^ Carpenter, Gail A.; Grossberg, Stephen (1987). "A massively parallel architecture for a self-organizing neural pattern recognition machine". Computer Vision, Graphics, and Image Processing. 37 (1): 54–115. doi:10.1016/S0734-189X(87)80014-2.

- ^ Itti, Laurent; Koch, Christof (1998). "A Model of Saliency-Based Visual Attention for Rapid Scene Analysis". IEEE Transactions on Pattern Analysis and Machine Intelligence. 20 (11): 1254–1259. doi:10.1109/34.730558. S2CID 3108956.

- ^ Maass, Wolfgang (2000-11-01). "On the Computational Power of Winner-Take-All". Neural Computation. 12 (11): 2519–2535. doi:10.1162/089976600300014827. ISSN 0899-7667. PMID 11110125. S2CID 10304135.

- ^ Grossberg, Stephen (1969-06-01). "Embedding fields: A theory of learning with physiological implications". Journal of Mathematical Psychology. 6 (2): 209–239. doi:10.1016/0022-2496(69)90003-0. ISSN 0022-2496.

- ^ a b Wilamowski, B. M. (2011). "Neural Networks Learning". Industrial Electronics Handbook, vol. 5: Intelligent Systems (2nd ed.). CRC Press. Chapter 11, pp. 11-1 to 11-18.

- ^ Lazzaro, J.; Ryckebusch, S.; Mahowald, M. A.; Mead, C. A. (1988-01-01). "Winner-Take-All Networks of O(N) Complexity". Fort Belvoir, VA. doi:10.21236/ada451466.

- ^ Scharstein, Daniel; Szeliski, Richard (2002). "A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms". International Journal of Computer Vision. 47 (1/3): 7–42. doi:10.1023/A:1014573219977. S2CID 195859047.