Hawthorne effect

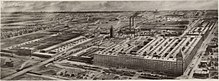

The Hawthorne effect is a type of reactivity in which individuals modify an aspect of their behavior in response to their awareness of being observed.[1][2] The effect was first described in connection with research conducted at the Western Electric Hawthorne Works; however, some scholars think the original descriptions are fictitious.[3]

The original research involved workers who made electrical relays at the Hawthorne Works, a Western Electric plant in Cicero, Illinois. The lighting study, conducted between 1924 and 1927, exposed workers to a series of lighting changes that were said to increase productivity; this conclusion turned out to be false.[3] In a study led by Elton Mayo from 1927 to 1928, a series of changes in work structure (e.g. changes in rest periods) was implemented for a group of six women. However, this was a methodologically poor, uncontrolled study from which no firm conclusions could be drawn.[4] Mayo later conducted two further experiments to study the phenomenon: the mass interviewing experiment (1928–1930) and the bank wiring observation experiment (1931–1932).

One later interpretation, by Henry Landsberger, a sociology professor at the University of North Carolina at Chapel Hill,[5] suggested that the novelty of being research subjects, and the increased attention that came with it, could lead to temporary increases in workers' productivity.[6] This interpretation was dubbed "the Hawthorne effect".

History

The term "Hawthorne effect" was coined in 1953 by John R. P. French[7] after the Hawthorne studies were conducted between 1924 and 1932 at the Hawthorne Works (a Western Electric factory in Cicero, outside Chicago). The Hawthorne Works had commissioned a study to determine whether its workers would become more productive under brighter or dimmer lighting. The workers' productivity seemed to improve whenever changes were made but returned to its original level when the study ended. An alternative suggestion is that productivity increased because the workers were motivated by the interest being shown in them.[8]

The effect was reportedly observed even for very small increases in illumination. In these lighting studies, light intensity was altered to examine the resulting effect on worker productivity. When discussing the Hawthorne effect, most industrial and organizational psychology textbooks refer almost exclusively to the illumination studies rather than to the other types of studies that were conducted.[9]

Although early studies focused on altering workplace illumination, other changes such as maintaining clean work stations, clearing floors of obstacles, and relocating workstations have also been found to increase productivity for short periods of time. Thus, the Hawthorne effect is not limited to changes in lighting.[6][10][11]

Illumination experiment

The illumination experiment was conducted from 1924 to 1927. The purpose was to determine the effect of light variations on worker productivity. The experiment ran in two rooms: the experiment room, in which workers went about their workday under various light levels; and the control room, in which workers did their tasks under normal conditions. The hypothesis was that as the light level was increased in the experiment room, productivity would increase.

However, when the intensity of light was increased in the experiment room, researchers found that productivity had improved in both rooms. The light level in the experiment room was then decreased, and the results were the same: increased productivity in both rooms. Productivity only began to decrease in the experiment room when the light level was reduced to about the level of moonlight, which made it hard to see.

Ultimately it was concluded that illumination did not have any effect on productivity and that there must have been some other variable causing the observed productivity increases in both rooms. Another phase of experiments was needed to pinpoint the cause.

Relay assembly experiments

In 1927, researchers conducted an experiment where they chose two female workers as test subjects and asked them to choose four other women to join the test group. Until 1928, the team of women worked in a separate room, assembling telephone relays.

Output was measured mechanically by counting how many finished relays each worker dropped down a chute. To establish a baseline productivity level, measurement began in secret two weeks before the women were moved to the experiment room and then continued throughout the study. In the experiment room, a supervisor discussed changes in productivity with the women.

Some of the variables were:

- Giving the workers two 5-minute breaks (which they said they preferred beforehand) and then switching to two 10-minute breaks. Productivity increased, but when they were given six 5-minute breaks, productivity decreased because the frequent rests broke the workers' flow.

- Providing soup or coffee with a sandwich in the morning and snacks in the evening. This increased productivity.

- Changing the end of the workday from 5:00 to 4:30 and eliminating the Saturday workday. This increased productivity.

Changing a variable usually increased productivity, even if the change was simply a return to the original condition. Some have suggested that this reflects natural adaptation to the environment by workers who did not know the objective of the experiment. Researchers concluded that the workers worked harder because they thought that they were being monitored individually.

Researchers hypothesized that choosing one's own coworkers, working as a group, being treated as special (as evidenced by working in a separate room), and having a sympathetic supervisor were the real reasons for the productivity increase. One interpretation, mainly due to Elton Mayo's studies,[12] was that "the six individuals became a team and the team gave itself wholeheartedly and spontaneously to cooperation in the experiment." A second relay assembly test room study produced less significant results than the first.

Mass interviewing program

The program was conducted from 1928 to 1930 and involved 20,000 interviews. The interviews initially used direct questioning about the supervision and policies of the company. The drawback of direct questioning was that the answers were only "yes" or "no", which did little to uncover the root of problems. Researchers therefore switched to indirect questioning, in which the interviewer mostly listened. This yielded valuable insights into workers' behavior, in particular that the behavior of an individual worker is shaped by the behavior of the group.

Bank wiring room experiments

The bank wiring room study, conducted by Elton Mayo and W. Lloyd Warner between 1931 and 1932 on a group of fourteen men who assembled telephone switching equipment, aimed to find out how payment incentives and small groups would affect productivity. Surprisingly, productivity actually decreased. Although the workers were paid according to individual productivity, the men were afraid that the company would lower the base rate, and they had apparently become suspicious that higher productivity might later be used to justify laying off some of the workers.[13] Detailed observation of the men revealed the existence of informal groups or "cliques" within the formal groups. These cliques developed informal rules of behavior as well as mechanisms to enforce them. The cliques served to control group members and to manage bosses; when bosses asked questions, clique members gave the same responses, even if they were untrue. These results suggest that workers were more responsive to the social force of their peer groups than to the control and incentives of management.

Interpretation and criticism

Richard Nisbett has described the Hawthorne effect as "a glorified anecdote", saying that "once you have got the anecdote, you can throw away the data."[14] Other researchers have attempted to explain the effect in various ways. J. G. Adair warned that most secondary publications on the Hawthorne effect contain gross factual inaccuracies and that many studies had failed to find it.[15] He argued that it should be viewed as a variant of Orne's (1973) experimental demand effect. For Adair, awareness of being observed was not in itself the source of the effect; rather, the participants' interpretation of the situation was critical, and the key question was how that interpretation interacted with the participants' goals. An implication is that manipulation checks are important in social science experiments.

Possible explanations for the Hawthorne effect include the impact of feedback and the participants' motivation towards the experimenter. Participants who receive feedback on their performance for the first time in an experiment may use it to improve their skills.[16] Research on the demand effect also suggests that people may be motivated to please the experimenter, at least if this does not conflict with any other motive.[17] They may also be suspicious of the experimenter's purpose.[16] On this account, the Hawthorne effect may occur only when there is usable feedback or a change in motivation.

Parsons defined the Hawthorne effect as "the confounding that occurs if experimenters fail to realize how the consequences of subjects' performance affect what subjects do" [i.e. learning effects, both permanent skill improvement and feedback-enabled adjustments to suit current goals]. His key argument was that in the studies where workers dropped their finished goods down chutes, the participants had access to counters showing their work rate and thus received feedback on their performance.[16]

Mayo contended that the effect was due to the workers reacting to the sympathy and interest of the observers. He presented the study as demonstrating an experimenter effect that was also a management effect: management can make workers perform differently by making them feel differently. He suggested that much of the Hawthorne effect concerned the workers feeling free and in control as a group rather than feeling supervised. The experimental manipulations were important in convincing the workers that conditions in the special five-person work group were actually different from those on the shop floor. The study was repeated with similar effects on mica-splitting workers.[12]

Clark and Sugrue, in a review of educational research, reported that uncontrolled novelty effects produce on average a rise of 30% of a standard deviation (SD) (i.e. raising the average score from the 50th to about the 63rd percentile), with the rise decaying to a much smaller effect after 8 weeks. In more detail: 50% of an SD for up to 4 weeks; 30% of an SD for 5–8 weeks; and 20% of an SD for more than 8 weeks (which accounts for less than 1% of the variance).[18]: 333
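The conversions behind these figures can be sketched in Python. This is a minimal illustration under stated assumptions, not Clark and Sugrue's own method: it assumes normally distributed scores, so that an average rise of d standard deviations moves the typical score to the Φ(d) percentile of the untreated distribution, and it assumes two equal-sized groups, for which the share of variance explained is d²/(d² + 4). The helper names `percentile_after_shift` and `variance_explained` are hypothetical.

```python
from statistics import NormalDist

def percentile_after_shift(d: float) -> float:
    """Percentile of the untreated score distribution reached by an average
    score that rises by d standard deviations (assumes normal scores)."""
    return 100 * NormalDist().cdf(d)

def variance_explained(d: float) -> float:
    """Share of score variance attributable to the novelty condition, using the
    conversion r = d / sqrt(d**2 + 4), which assumes two equal-sized groups."""
    return d * d / (d * d + 4)

# Effect sizes (in SD units) reported by Clark and Sugrue, by exposure period
effects = {"up to 4 weeks": 0.5, "5-8 weeks": 0.3, "over 8 weeks": 0.2}

for period, d in effects.items():
    print(f"{period}: d = {d} SD -> percentile {percentile_after_shift(d):.1f}, "
          f"{100 * variance_explained(d):.2f}% of variance")
```

Under these assumptions a 0.3 SD rise places the average score at roughly the 62nd percentile, close to the range quoted above, and a 0.2 SD rise corresponds to about 0.99% of the variance, consistent with the "less than 1%" figure.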

Harry Braverman pointed out that the Hawthorne tests were based on industrial psychology and that the researchers involved were investigating whether workers' performance could be predicted by pre-hire testing. The Hawthorne study showed "that the performance of workers had little relation to their ability and in fact often bore an inverse relation to test scores ...".[19] Braverman argued that the studies really showed that the workplace was not "a system of bureaucratic formal organisation on the Weberian model, nor a system of informal group relations, as in the interpretation of Mayo and his followers but rather a system of power, of class antagonisms". In his view, this discovery was a blow to those hoping to apply the behavioral sciences to manipulate workers in the interest of management.[19]

The economists Steven Levitt and John A. List spent a long time searching unsuccessfully for the original data from the illumination experiments (which were not true experiments, although some authors have labeled them as such) before finding them on microfilm at the University of Wisconsin–Milwaukee in 2011.[20] On re-analysis, they found slight evidence of a long-run Hawthorne effect, but nothing as dramatic as initially suggested.[21] This finding was consistent with a 1992 analysis of the relay experiments by S. R. G. Jones.[22][23] Despite the lack of strong evidence for the Hawthorne effect in the original study, List has said that he remains confident that the effect is genuine.[24]

Gustav Wickström and Tom Bendix (2000) argue that the supposed "Hawthorne effect" is actually ambiguous and disputable, and instead recommend that to evaluate intervention effectiveness, researchers should introduce specific psychological and social variables that may have affected the outcome.[25]

It is also possible that the illumination experiments can be explained by a longitudinal learning effect. Parsons declined to analyse the illumination experiments on the grounds that they had not been properly published, so he could not obtain the details; by contrast, he had extensive personal communication with Roethlisberger and Dickson.[16]

Evaluation of the Hawthorne effect continues in the present day.[26][27][28][29] Despite the criticisms, however, the phenomenon is often taken into account when designing studies and drawing conclusions from them.[30] Researchers have also developed ways to avoid it; for instance, in a field study, observation can be conducted from a distance, from behind a barrier such as a two-way mirror, or by using an unobtrusive measure.[31]

Greenwood, Bolton, and Greenwood (1983) interviewed some of the participants in the relay assembly experiments and found that the participants had been paid significantly better.[32]

Trial effect

Various medical scientists have studied a possible trial effect (clinical trial effect) in clinical trials.[33][34][35] Some postulate that, beyond attention and observation, other factors may be involved, such as slightly better care, slightly better compliance/adherence, and selection bias. Selection bias may arise through several mechanisms: (1) physicians may tend to recruit patients who seem to have better adherence potential and a lower likelihood of future loss to follow-up; (2) the inclusion/exclusion criteria of trials often exclude at least some comorbidities, and although this is often necessary to prevent confounding, it also means that trials may tend to work with healthier patient subpopulations.

Secondary observer effect

Although the observer effect popularized by the Hawthorne experiments may have been misidentified (see the discussion above), its popularity and theoretical plausibility have led researchers to postulate that a similar effect could operate at a second level. It has been proposed that a secondary observer effect arises when researchers working with secondary data, such as survey data or various indicators, influence the results of their own scientific research. Rather than affecting the subjects (as with the primary observer effect), researchers may have their own idiosyncrasies that influence how they handle the data and even which data they obtain from secondary sources. For one, researchers may choose seemingly innocuous steps in their statistical analyses that end up producing significantly different results from the same data, e.g. weighting strategies, factor-analytic techniques, or choice of estimation method. In addition, researchers may use software packages whose different default settings lead to small but significant fluctuations. Finally, the data that researchers use may not be identical even when they appear to be. For example, the OECD collects and distributes various socio-economic data, but these data change over time, so a researcher who downloads Australian GDP data for the year 2000 may obtain slightly different values from a researcher who downloads the same data a few years later. The idea of the secondary observer effect was proposed by Nate Breznau in a paper that has so far received relatively little attention.[36]
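Two of the analytical choices described above, weighting and software defaults, can be illustrated with a small Python sketch. The survey scores and weights below are hypothetical and serve only to show how identical data can yield different summary numbers; this is not a model of Breznau's analysis. The standard-deviation convention is a real example of differing defaults: NumPy's `std` uses the population formula (`ddof=0`) by default, while pandas' `std` uses the sample formula (`ddof=1`).

```python
from statistics import fmean, pstdev, stdev

# Hypothetical survey responses (e.g. a 1-10 attitude score) and design weights;
# these numbers are illustrative only and do not come from any real study.
scores = [4, 6, 7, 5, 9, 3, 8, 6]
weights = [1.0, 0.5, 2.0, 1.0, 0.5, 1.5, 1.0, 2.5]

def weighted_mean(values, w):
    """Mean in which each observation counts in proportion to its weight."""
    return sum(v * wi for v, wi in zip(values, w)) / sum(w)

# Choice 1: whether to apply the weights at all.
unweighted = fmean(scores)
weighted = weighted_mean(scores, weights)

# Choice 2: which standard-deviation convention to use. The sample formula
# divides the sum of squared deviations by n - 1, the population formula by n.
sample_sd = stdev(scores)
population_sd = pstdev(scores)

print(f"mean: unweighted {unweighted:.3f} vs weighted {weighted:.3f}")
print(f"SD:   sample {sample_sd:.3f} vs population {population_sd:.3f}")
```

Neither choice is wrong in itself, but two analysts who make different, equally defensible choices on this same data would report different means (6.0 versus 5.8) and different standard deviations (2.0 versus about 1.87).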

Although little attention has been paid to this phenomenon, its scientific implications could be very large.[37] Evidence of this effect may be seen in recent studies that assign a particular problem to a number of researchers or research teams, who then work independently on the same data to try to find a solution. This process, called crowdsourcing data analysis, was used in a study by Raphael Silberzahn, Eric Uhlmann, Dan Martin, Brian Nosek and colleagues (2015) on red cards and player race in football (soccer).[38][39]

See also

- Barnum effect

- Demand characteristics

- Goodhart's law

- John Henry effect

- Mass surveillance

- Monitoring and evaluation

- Novelty effect

- Panopticism

- PDCA

- Placebo effect

- Pygmalion effect

- Quantum Zeno effect

- Reflexivity (social theory)

- Scientific management

- Self-determination theory

- Social facilitation

- Stereotype threat

- Subject-expectancy effect

- Time and motion study

- Watching-eye effect

References

- ^ McCarney R, Warner J, Iliffe S, van Haselen R, Griffin M, Fisher P (2007). "The Hawthorne Effect: a randomised, controlled trial". BMC Med Res Methodol. 7: 30. doi:10.1186/1471-2288-7-30. PMC 1936999. PMID 17608932.

- ^ Fox NS, Brennan JS, Chasen ST (2008). "Clinical estimation of fetal weight and the Hawthorne effect". Eur. J. Obstet. Gynecol. Reprod. Biol. 141 (2): 111–114. doi:10.1016/j.ejogrb.2008.07.023. PMID 18771841.

- ^ a b Levitt SD, List JA (2011). "Was there really a Hawthorne effect at the Hawthorne plant? An analysis of the original illumination experiments" (PDF). American Economic Journal: Applied Economics. 3: 224–238. doi:10.1257/app.3.1.224.

- ^ Schonfeld IS, Chang CH (2017). Occupational health psychology: Work, stress, and health. New York: Springer. ISBN 978-0-8261-9967-6.

- ^ Singletary R (March 21, 2017). "Henry Landsberger 1926-2017". Department of Sociology. University of North Carolina at Chapel Hill. Archived from the original on March 30, 2017.

- ^ a b Landsberger HA (1958). Hawthorne Revisited. Ithaca: Cornell University. OCLC 61637839.

- ^ Utts JM, Heckard RF (2021). Mind on Statistics. Cengage Learning. p. 222. ISBN 978-1-337-79488-6.

- ^ Cox E (2000). Psychology for AS Level. Oxford: Oxford University Press. p. 158. ISBN 0198328249.

- ^ Olson R, Verley J, Santos L, Salas C (2004). "What We Teach Students About the Hawthorne Studies: A Review of Content Within a Sample of Introductory I-O and OB Textbooks" (PDF). The Industrial-Organizational Psychologist. 41: 23–39. Archived from the original (PDF) on November 3, 2011.

- ^ Mayo E (1949). "Hawthorne and the Western Electric Company". The Social Problems of an Industrial Civilisation. Routledge.

- ^ Bowey DA. "Motivation at Work: a key issue in remuneration". Archived from the original on July 1, 2007. Retrieved November 22, 2011.

- ^ a b Mayo E (1945). Social Problems of an Industrial Civilization. Boston: Division of Research, Graduate School of Business Administration, Harvard University. p. 72.

- ^ Henslin JM (2008). Sociology: a down to earth approach (9th ed.). Pearson Education. p. 140. ISBN 978-0-205-57023-2.

- ^ Kolata G (December 6, 1998). "Scientific Myths That Are Too Good to Die". New York Times.

- ^ Adair J (1984). "The Hawthorne Effect: A reconsideration of the methodological artifact" (PDF). Journal of Applied Psychology. 69 (2): 334–345. doi:10.1037/0021-9010.69.2.334. S2CID 145083600. Archived from the original (PDF) on December 15, 2013. Retrieved December 12, 2013.

- ^ a b c d Parsons HM (1974). "What happened at Hawthorne?: New evidence suggests the Hawthorne effect resulted from operant reinforcement contingencies". Science. 183 (4128): 922–932. doi:10.1126/science.183.4128.922. PMID 17756742. S2CID 38816592.

- ^ Steele-Johnson D, Beauregard RS, Hoover PB, Schmidt AM (2000). "Goal orientation and task demand effects on motivation, affect, and performance". The Journal of Applied Psychology. 85 (5): 724–738. doi:10.1037/0021-9010.85.5.724. PMID 11055145.

- ^ Clark RE, Sugrue BM (1991). "30. Research on instructional media, 1978–1988". In Anglin GJ (ed.). Instructional technology: past, present, and future. Englewood, Colorado: Libraries Unlimited. pp. 327–343.

- ^ a b Braverman H (1974). Labor and Monopoly Capital. New York: Monthly Review Press. pp. 144–145. ISBN 978-0853453406.

- ^ BBC Radio 4 programme More Or Less, "The Hawthorne Effect", broadcast 12 October 2013, presented by Tim Harford with contributions by John List

- ^ Levitt SD, List JA (2011). "Was There Really a Hawthorne Effect at the Hawthorne Plant? An Analysis of the Original Illumination Experiments" (PDF). American Economic Journal: Applied Economics. 3 (1): 224–238. doi:10.1257/app.3.1.224. S2CID 16678444.

- ^ "Light work". The Economist. June 6, 2009. p. 80.

- ^ Jones SR (1992). "Was there a Hawthorne effect?" (PDF). American Journal of Sociology. 98 (3): 451–468. doi:10.1086/230046. JSTOR 2781455. S2CID 145357472.

- ^ More or Less (podcast), BBC Radio 4, 12 October 2013, from 6 min 15 s.

- ^ Wickström G, Bendix T (2000). "The "Hawthorne effect" – what did the original Hawthorne studies actually show?". Scandinavian Journal of Work, Environment & Health. 26 (4). Scandinavian Journal of Work, Environment and Health: 363–367. doi:10.5271/sjweh.555.

- ^ Kohli E, Ptak J, Smith R, Taylor E, Talbot EA, Kirkland KB (2009). "Variability in the Hawthorne effect with regard to hand hygiene performance in high- and low-performing inpatient care units". Infect Control Hosp Epidemiol. 30 (3): 222–225. doi:10.1086/595692. PMID 19199530. S2CID 19058173.

- ^ Cocco G (2009). "Erectile dysfunction after therapy with metoprolol: the hawthorne effect". Cardiology. 112 (3): 174–177. doi:10.1159/000147951. PMID 18654082. S2CID 41426273.

- ^ Leonard KL (2008). "Is patient satisfaction sensitive to changes in the quality of care? An exploitation of the Hawthorne effect". J Health Econ. 27 (2): 444–459. doi:10.1016/j.jhealeco.2007.07.004. PMID 18192043.

- ^ "What is Hawthorne Effect?". MBA Learner. February 22, 2018. Archived from the original on February 26, 2018. Retrieved February 25, 2018.

- ^ Salkind N (2010). Encyclopedia of Research Design, Volume 2. Thousand Oaks, CA: Sage Publications, Inc. p. 561. ISBN 978-1412961271.

- ^ Kirby M, Kidd W, Koubel F, Barter J, Hope T, Kirton A, Madry N, Manning P, Triggs K (2000). Sociology in Perspective. Oxford: Heinemann. pp. G-359. ISBN 978-0435331603.

- ^ Greenwood RG, Bolton AA, Greenwood RA (1983). "Hawthorne a Half Century Later: Relay Assembly Participants Remember". Journal of Management. 9 (2): 217–231. doi:10.1177/014920638300900213. S2CID 145767422.

- ^ Menezes P, Miller WC, Wohl DA, Adimora AA, Leone PA, Eron JJ (2011). "Does HAART efficacy translate to effectiveness? Evidence for a trial effect". PLoS ONE. 6 (7): e21824. Bibcode:2011PLoSO...621824M. doi:10.1371/journal.pone.0021824. PMC 3135599. PMID 21765918.

- ^ Braunholtz DA, Edwards SJ, Lilford RJ (2001). "Are randomized clinical trials good for us (in the short term)? Evidence for a "trial effect"". J Clin Epidemiol. 54 (3): 217–224. doi:10.1016/s0895-4356(00)00305-x. PMID 11223318.

- ^ McCarney R, Warner J, Iliffe S, van Haselen R, Griffin M, Fisher P (2007). "The Hawthorne Effect: a randomised, controlled trial". BMC Medical Research Methodology. 7: 30. doi:10.1186/1471-2288-7-30. PMC 1936999. PMID 17608932.

- ^ Breznau N (May 3, 2016). "Secondary observer effects: idiosyncratic errors in small-N secondary data analysis". International Journal of Social Research Methodology. 19 (3): 301–318. doi:10.1080/13645579.2014.1001221. ISSN 1364-5579. S2CID 145402768.

- ^ Shi Y, Sorenson O, Waguespack D (January 30, 2017). "Temporal Issues in Replication: The Stability of Centrality-Based Advantage". Sociological Science. 4: 107–122. doi:10.15195/v4.a5. ISSN 2330-6696.

- ^ Silberzahn R, Uhlmann EL, Martin DP, Nosek BA, et al. (2015). "Many analysts, one dataset: Making transparent how variations in analytical choices affect". OSF.io. Retrieved December 7, 2016.

- ^ "Crowdsourcing Data to Improve Macro-Comparative Research". Policy and Politics Journal. March 26, 2015. Retrieved December 7, 2016.

- Ciment S (March 23, 2020). "Costco Is Offering an Additional $2 an Hour to Its Hourly Employees across the US as the Coronavirus Outbreak Causes Massive Shopping Surges". Business Insider. www.businessinsider.com/costco-pays-workers-2-dollars-an-hour-more-coronavirus-2020-3.

- Miller K, Barbour J (2014). Organizational Communication: Approaches and Processes (7th ed.). Cengage Learning.

External links

- The Hawthorne, Pygmalion, placebo and other expectancy effects: some notes, by Stephen W. Draper, Department of Psychology, University of Glasgow.

- BBC Radio 4: Mind Changers: The Hawthorne Effect

- Harvard Business School and the Hawthorne Experiments (1924–1933), Harvard Business School.